Telemetry

The objective of the telemetry feature is to gather metrics and establish an infrastructure for visualizing valuable network insights. Some of the metrics that we at the Interledger Foundation collect include:

- The total amount of money transferred via packet data within a specified time frame (daily, weekly, monthly)

- The number of transactions that have been at least partially successful

- The number of ILP packets flowing through the network

- The average amount of money held within the network per transaction

- The average time it takes for an outgoing payment to complete

Our goals are to:

- Track the growth of the network in terms of transaction sizes and the number of transactions processed

- Use the data for our own insights

- Enable you to gain your own insights

Privacy is a paramount concern for the Interledger Foundation. Rafiki’s telemetry feature is designed to provide valuable network insights without violating privacy or aiding malicious account servicing entities (ASEs). Review the Privacy section below for more information.

The telemetry feature is currently enabled by default on test environments (environments not dealing with real money). When active, the feature transmits metrics to the testnet collector. You can opt in to sharing your metrics with a livenet collector when operating in a production livenet environment (with real money). Regardless of environment, you can also opt out of telemetry completely. Review the telemetry environment variables for more information.

The Interledger Foundation has adopted OpenTelemetry (OTEL) to ensure compliance with a standardized framework that is compatible with a variety of tool suites. OTEL allows you to use your preferred tools for data analysis, while Rafiki is instrumented and observable through a standardized metrics format.

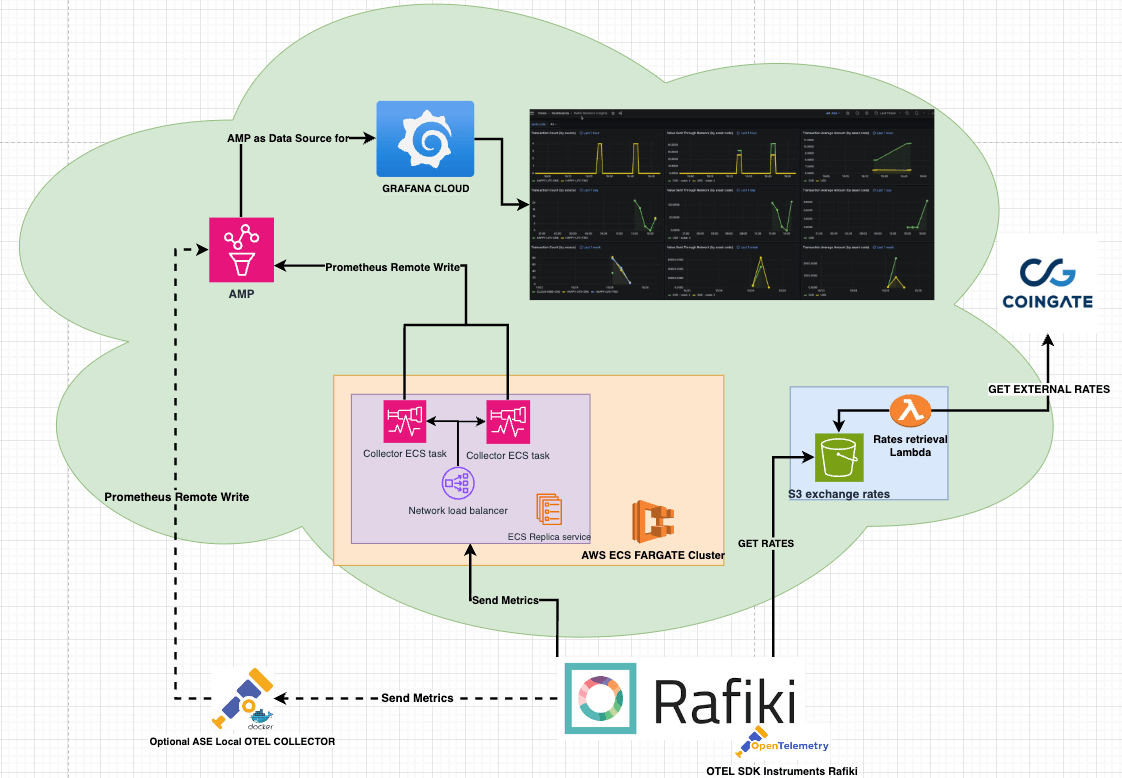

Telemetry Elastic Container Service (ECS) cluster

The Telemetry Replica service is hosted on AWS ECS Fargate and is configured for availability and load balancing of custom ADOT (AWS Distro for OpenTelemetry) Collector ECS tasks.

When you opt in to telemetry, metrics are sent to our Telemetry service. To enable you to build your own telemetry solutions, instrumented Rafiki can send data to multiple endpoints. This allows for the integration of a local OTEL Collector container that can support custom requirements. Metrics are transmitted over gRPC.

The OTEL SDK is integrated into Rafiki to create, collect, and export metrics. The SDK integrates seamlessly with the OTEL Collector.

The Interledger Foundation uses Amazon Managed Service for Prometheus (AMP) to collect data from the telemetry cluster.

Grafana Cloud is used for data visualization dashboards and offers multiple tools that extend Prometheus PromQL.

For telemetry purposes, all amounts collected by instrumented Rafiki should be converted to a base currency.

If an ASE does not provide the necessary exchange rate for a transaction, the telemetry solution still converts the amount to the base currency using external exchange rates. A Lambda function on AWS retrieves and stores the external exchange rates. The function is triggered by a daily CloudWatch event and stores the rates in a public S3 bucket. The S3 bucket does not have versioning, and the data is overwritten daily to further ensure privacy.
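The conversion with a fallback to external rates can be sketched as follows. This is an illustration only, not Rafiki's code: the function name, the shape of the rate dictionaries, the interpretation of a rate as "base-currency units per one unit of the asset", and the base scale of 4 are all assumptions.

```python
from decimal import Decimal, ROUND_HALF_UP

BASE_SCALE = 4  # assumption: base-currency amounts are reported in scale 4


def to_base_currency(value, asset_code, asset_scale, ase_rates, external_rates):
    """Convert an integer amount in minor units to base-currency minor units.

    ase_rates and external_rates are hypothetical dicts that map an asset
    code to the number of base-currency units per one unit of that asset.
    """
    # Prefer the rate supplied by the ASE; fall back to the external rate.
    rate = ase_rates.get(asset_code)
    if rate is None:
        rate = external_rates[asset_code]

    major_units = Decimal(value) / (Decimal(10) ** asset_scale)
    base_minor = major_units * Decimal(str(rate)) * (Decimal(10) ** BASE_SCALE)
    return int(base_minor.to_integral_value(rounding=ROUND_HALF_UP))
```

For example, 10.00 EUR (value `1000` at scale 2) with a fallback rate of `1.10` becomes `110000` in scale 4.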

Rafiki has the following metrics. All data points (counter increases) are exported to collection endpoints at a configurable interval. The default interval is 15 seconds.

| Metric | Type | Description | Behavior |
|---|---|---|---|
| transactions_total | Counter | Count of funded outgoing transactions | Increases by 1 for each successfully funded outgoing payment resource |
| packet_count_prepare | Counter | Count of ILP Prepare packets that are sent | Increases by 1 for each Prepare packet that’s sent |
| packet_count_fulfill | Counter | Count of ILP Fulfill packets | Increases by 1 for each Fulfill packet that’s received |
| packet_count_reject | Counter | Count of ILP Reject packets | Increases by 1 for each Reject packet that’s received |
| packet_amount_fulfill | Counter | Amount sent through the network | Increases by the amount sent in each ILP packet |
| transaction_fee_amounts | Counter | Fee amount sent through network | Increases by the amount sent minus the amount received for an outgoing payment |
| ilp_pay_time_ms | Histogram | Time to complete an ILP payment | Records the time taken to make an ILP payment |

The current implementation only collects metrics on the SENDING side of a transaction. Metrics for external Open Payments transactions RECEIVED by a Rafiki instance in the network are not collected.

Rafiki telemetry is designed with a strong emphasis on privacy. The system anonymizes user data and refrains from collecting identifiable information. Since transactions can originate from any user to a Rafiki instance, the privacy measures are implemented directly at the source (each Rafiki instance). This means that at the individual level, the data is already anonymous as single Rafiki instances service transactions for multiple users.

Differential privacy and local differential privacy (LDP)

Differential privacy is a system for publicly sharing information about a dataset by describing the patterns of groups within the dataset while withholding information about individuals in the dataset. Local differential privacy (LDP) is a variant of differential privacy where noise is added to each individual’s data point before the data point is sent to the server. This ensures that the server never sees the actual data, providing a strong privacy guarantee.

Rafiki’s telemetry implementation uses a rounding technique that essentially aggregates multiple transactions into the same value, making them indistinguishable. This is achieved by dividing the transaction values into buckets and rounding the values to the nearest bucket.

The bucket size is calculated based on the raw transaction value. For lower value transactions, which are expected to occur more frequently, the bucket sizes are determined linearly for higher granularity. However, after a certain threshold, the bucket size calculation switches to a logarithmic function to ensure privacy for higher value transactions (which are less frequent but pose greater privacy concerns).

To handle outliers, a clipping technique is implemented, capping the buckets. Any value that exceeds a given threshold is placed in a single bucket. Conversely, any value that falls below a certain minimum is also placed in a single bucket. This ensures that both high and low outliers do not disproportionately affect the overall data, providing further privacy guarantees for these transactions.
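The bucketing, rounding, and clipping steps described above can be sketched as follows. This is a minimal illustration, not Rafiki's implementation: every constant (the linear step, the switch-over threshold, the bucket-size cap, and the clipping bounds) is a hypothetical value chosen only so that the sketch yields plausible scale-4 results, and the doubling rule used for the logarithmic region is likewise an assumption.

```python
import math

# Hypothetical constants, NOT confirmed Rafiki settings.
LINEAR_STEP = 5_000           # bucket granularity for low values
LOG_THRESHOLD = 20_000        # above this, bucket sizes grow logarithmically
MAX_BUCKET_SIZE = 10_000_000  # cap on the bucket size
MIN_VALUE = 5_000             # low outliers are clipped up to this value
MAX_VALUE = 100_000_000       # high outliers are clipped down to this value


def bucket_size(value):
    """Return the bucket size for an (already clipped) value."""
    if value <= LOG_THRESHOLD:
        # Linear region: buckets are multiples of LINEAR_STEP.
        return max(math.ceil(value / LINEAR_STEP) * LINEAR_STEP, LINEAR_STEP)
    # Logarithmic region: bucket size doubles as the value doubles.
    exponent = math.ceil(math.log2(value / LOG_THRESHOLD))
    return min(LOG_THRESHOLD * 2 ** exponent, MAX_BUCKET_SIZE)


def round_to_bucket(raw):
    """Clip the raw value, then round it to the nearest multiple of its bucket size."""
    clipped = min(max(raw, MIN_VALUE), MAX_VALUE)
    size = bucket_size(clipped)
    rounded = (clipped + size // 2) // size * size
    return size, rounded
```

With these assumed constants, `round_to_bucket(8300)` returns `(10000, 10000)`, and any raw value above `MAX_VALUE` lands in the same `100000000` bucket.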

The Laplacian distribution is often used in differential privacy due to its double exponential decay property. This property ensures that a small change in the data does not significantly affect the probability distribution of the output, providing a strong privacy guarantee.
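As a reference for the property mentioned above, the density of a zero-centered Laplace distribution can be written as follows. The symbols $b$ (scale) and $\Delta$ (shift of the input) are notation introduced here for illustration and do not come from Rafiki's documentation.

$$
f(x \mid b) = \frac{1}{2b} \exp\left(-\frac{|x|}{b}\right)
$$

Because $|x - \Delta| - |x| \le |\Delta|$, shifting the input by $\Delta$ changes the density at any point by at most a bounded factor:

$$
\frac{f(x \mid b)}{f(x - \Delta \mid b)} = \exp\left(\frac{|x - \Delta| - |x|}{b}\right) \le \exp\left(\frac{|\Delta|}{b}\right)
$$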

To achieve local differential privacy (LDP), noise is selected from the Laplacian distribution and added to the rounded values. The noise is generated based on a privacy parameter, which is calculated using the sensitivity of the function.

The sensitivity of a function in differential privacy is the maximum amount that any single observation can change the output of the function. In this case, the sensitivity is considered to be the maximum of the rounded value and the bucket size.

The privacy parameter is computed as one-tenth of the sensitivity. This parameter controls the trade-off between privacy and utility: a smaller privacy parameter means more privacy but less utility, and a larger privacy parameter means less privacy but more utility.

The noise, selected from the Laplacian distribution, is then generated using this privacy parameter and added to the rounded value. If the resulting value is zero, the value is set to half the bucket size to ensure that the noise does not completely obscure the transaction value.
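The noise step can be sketched as follows, taking an already rounded value and its bucket size as inputs. This is a minimal illustration under stated assumptions, not Rafiki's code: the function names are hypothetical, the privacy parameter is used directly as the scale of the Laplace distribution, and the noise is drawn by inverse transform sampling. The optional `u` argument exists only to make the example reproducible.

```python
import math
import random


def privacy_parameter(rounded_value, bucket_size):
    """One-tenth of the sensitivity, where the sensitivity is the larger input."""
    sensitivity = max(rounded_value, bucket_size)
    return sensitivity / 10


def laplace_noise(scale, u=None):
    """Draw zero-centered Laplace noise by inverse transform sampling.

    u must lie strictly between -0.5 and 0.5; it is drawn at random if omitted.
    """
    if u is None:
        u = random.random() - 0.5
        while u == -0.5:  # avoid log(0) at the boundary
            u = random.random() - 0.5
    return -scale * math.copysign(1.0, u) * math.log(1 - 2 * abs(u))


def apply_noise(rounded_value, bucket_size, u=None):
    """Add Laplace noise to a rounded value; replace a zero result with half a bucket."""
    scale = privacy_parameter(rounded_value, bucket_size)
    final_value = round(rounded_value + laplace_noise(scale, u))
    if final_value == 0:
        final_value = bucket_size // 2
    return final_value
```

For a rounded value of `10000` in a bucket of size `10000`, the privacy parameter is `1000`, so most noise draws fall within a few thousand units of the rounded value.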

Another factor that obscures sensitive data is currency conversion. In cross-currency transactions, exchange rates are provided by you, as the ASE, internally. As such, the exchange rates cannot be correlated to an individual transaction. If you don’t or can’t provide the necessary rates, an external API for exchange rates is used. The obtained exchange rates are overwritten frequently in this case, with no versioning or history access. This introduces an additional layer of noise and further protects the privacy of the transactions.

Experimental transaction values when using the algorithm

The following table shows the values in the algorithm when running transactions for different amounts. The raw value increases as you move down the rows of the table. All values are in scale 4.

| Raw value | Bucket size | Rounded value | Privacy parameter | Laplace noise | Final value |
|---|---|---|---|---|---|
| 8300 | 10000 | 10000 | 1000 | 2037 | 12037 |
| 13200 | 15000 | 15000 | 1500 | 1397 | 16397 |
| 147700 | 160000 | 160000 | 16000 | -27128 | 132872 |
| 1426100 | 2560000 | 2560000 | 256000 | -381571 | 2178429 |
| 1788200 | 2560000 | 2560000 | 256000 | 463842 | 3023842 |
| 90422400 | 10000000 | 90000000 | 1000000 | 2210649 | 92210649 |
| 112400400 | 10000000 | 100000000 | 1000000 | 407847 | 100407847 |
| 222290500 | 10000000 | 100000000 | 1000000 | -686149 | 99313851 |

Rafiki’s telemetry solution is a combination of techniques described in various white papers on privacy-preserving data collection. More information can be found in the following papers:

- Local differential privacy for human-centered computing

- Collecting telemetry data privately

- RAPPOR: Randomized aggregatable privacy-preserving ordinal response

Rafiki allows you to build your own telemetry solution based on the OpenTelemetry (OTEL) standardized metrics format that Rafiki exposes.

You must deploy your own OTEL Collector that acts as a sidecar container to Rafiki, then provide the OTEL Collector’s ingest endpoint so that Rafiki can begin sending metrics to the collector.

When the ENABLE_TELEMETRY variable is true, the following are required.

| Variable name | Type | Description |
|---|---|---|
| INSTANCE_NAME | String | Your Rafiki instance’s name used to communicate for telemetry and auto-peering. For telemetry, it’s used to distinguish between the different instances pushing data to the telemetry collector. |

| Variable name | Type | Description |
|---|---|---|
| ENABLE_TELEMETRY | Boolean | Enables the telemetry service on Rafiki. Defaults to true. |
| LIVENET | Boolean | Determines where to send metrics. Defaults to false, resulting in metrics being sent to the testnet OTEL Collector. Set to |
| OPEN_TELEMETRY_COLLECTOR_URLS | String | A CSV of URLs for OTEL Collectors (e.g., http://otel-collector-NLB-e3172ff9d2f4bc8a.elb.eu-west-2.amazonaws.com:4317,http://happy-life-otel-collector:4317). |
| OPEN_TELEMETRY_EXPORT_INTERVAL | Number | Indicates, in milliseconds, how often the instrumented Rafiki instance should send metrics. Defaults to 15000. |
| TELEMETRY_EXCHANGE_RATES_URL | String | Defines the endpoint Rafiki queries for exchange rates. Used as a fallback if/when exchange rates aren’t provided. When set, the response format of the external exchange rates API should be of type Defaults to |
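To show how the variables above fit together, here is a small sketch that reads them from an environment mapping and applies the documented defaults. It is an illustration only: the function name and the returned dictionary keys are hypothetical, and Rafiki's own configuration loading may differ.

```python
def load_telemetry_config(env):
    """Build a telemetry config dict from a mapping of environment variables."""

    def as_bool(name, default):
        raw = env.get(name)
        return default if raw is None else raw.strip().lower() == "true"

    config = {
        "enabled": as_bool("ENABLE_TELEMETRY", True),  # documented default: true
        "livenet": as_bool("LIVENET", False),          # documented default: false
        "instance_name": env.get("INSTANCE_NAME"),
        # The collector URLs are provided as a comma-separated list.
        "collector_urls": [
            url.strip()
            for url in env.get("OPEN_TELEMETRY_COLLECTOR_URLS", "").split(",")
            if url.strip()
        ],
        # Export interval in milliseconds; documented default: 15000.
        "export_interval_ms": int(env.get("OPEN_TELEMETRY_EXPORT_INTERVAL", "15000")),
    }

    # INSTANCE_NAME is required whenever telemetry is enabled.
    if config["enabled"] and not config["instance_name"]:
        raise ValueError("INSTANCE_NAME is required when ENABLE_TELEMETRY is true")
    return config
```

In a real process you would pass `os.environ` as `env`; a plain dictionary works equally well for experimentation.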

Example Docker OTEL Collector image and configuration

Below is an example of a Docker OTEL Collector image and configuration that integrates with Rafiki and sends data to a Prometheus remote write endpoint.

You can test the configuration in our Local Playground by providing the environment variables in the preceding table to happy-life-backend in the docker-compose.yml file.

    # Serves as example for optional local collector configuration
    happy-life-otel-collector:
      image: otel/opentelemetry-collector-contrib:latest
      command: ['--config=/etc/otel-collector-config.yaml', '']
      environment:
        - AWS_ACCESS_KEY_ID=${AWS_ACCESS_KEY_ID-''}
        - AWS_SECRET_ACCESS_KEY=${AWS_SECRET_ACCESS_KEY-''}
      volumes:
        - ../collector/otel-collector-config.yaml:/etc/otel-collector-config.yaml
      networks:
        - rafiki
      expose:
        - 4317
      ports:
        - '13132:13133' # health_check extension

Supplemental documentation is available in OTEL’s Collector Configuration documentation.

    # Serves as example for the configuration of a local OpenTelemetry Collector that sends metrics to an AWS Managed Prometheus Workspace
    # Sigv4auth required for AWS Prometheus Remote Write access (USER with access keys needed)

    extensions:
      sigv4auth:
        assume_role:
          arn: 'arn:aws:iam::YOUR-ROLE:role/PrometheusRemoteWrite'
          sts_region: 'YOUR-REGION'

    receivers:
      otlp:
        protocols:
          grpc:
          http:
            cors:
              allowed_origins:
                - http://*
                - https://*

    processors:
      batch:

    exporters:
      logging:
        verbosity: 'normal'
      prometheusremotewrite:
        endpoint: 'https://aps-workspaces.YOUR-REGION.amazonaws.com/workspaces/ws-YOUR-WORKSPACE-IDENTIFIER/api/v1/remote_write'
        auth:
          authenticator: sigv4auth

    service:
      telemetry:
        logs:
          level: 'debug'
        metrics:
          level: 'detailed'
          address: 0.0.0.0:8888
      extensions: [sigv4auth]
      pipelines:
        metrics:
          receivers: [otlp]
          processors: [batch]
          exporters: [logging, prometheusremotewrite]